Sampling Resolution, Variogram Identifiability, and Matérn Spectral Structure

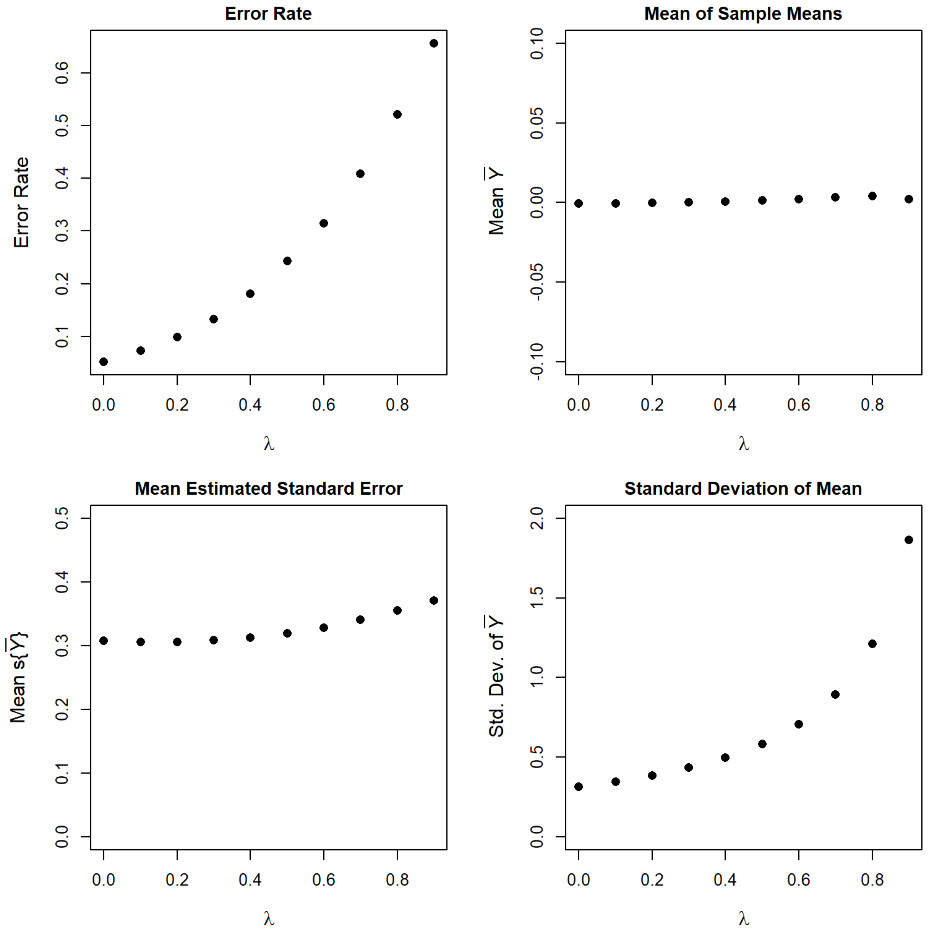

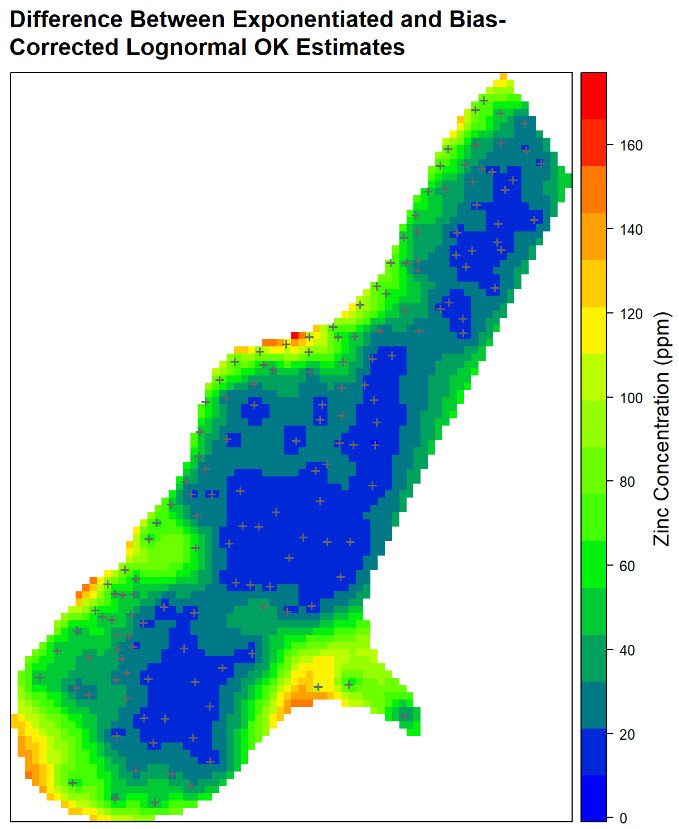

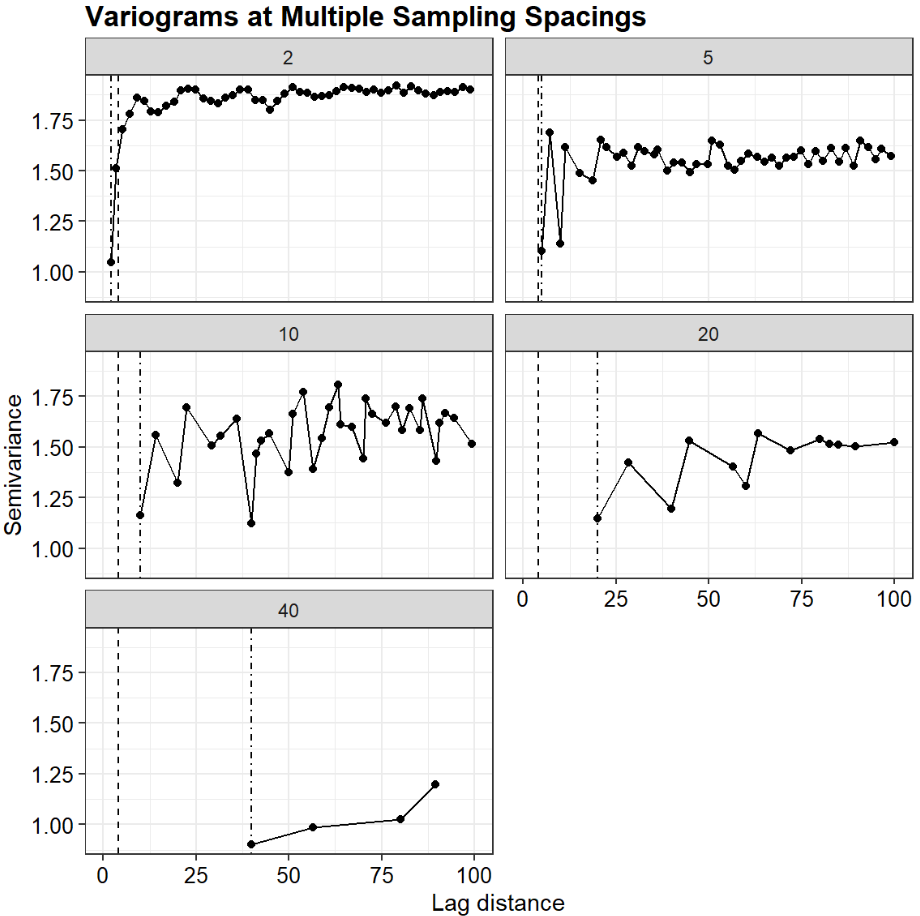

This paper examines how sampling resolution constrains variogram identifiability. It shows that spatial variability at scales smaller than the sampling interval cannot be resolved and is instead absorbed into the nugget effect. Using a spectral representation of stationary random fields and the Matérn covariance family, the analysis formally demonstrates how unresolved micro-scale variability inflates the nugget term and alters the structure of the empirical variogram. The results emphasize that variogram interpretation and sampling design must be aligned with plausible spatial scales of variability to support defensible environmental decision-making.
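The nugget-inflation mechanism can be illustrated numerically. The sketch below is not the paper's derivation. It assumes a hypothetical nested model: a true nugget, a micro-scale Matérn structure whose range is far below the sampling spacing, and a large-scale Matérn structure. It uses closed-form Matérn correlations for half-integer smoothness (ν = 0.5, 1.5, 2.5) to avoid Bessel functions. The "apparent nugget" is a hypothetical heuristic: a linear extrapolation of γ from the first two sampled lags back to h = 0. The spectral helper applies only to a 1-D exponential (ν = 1/2) field. It reports the share of variance at frequencies above the Nyquist frequency π/Δ, which a grid of spacing Δ cannot resolve. All parameter values are made up for illustration.

```python
import math


def matern_corr(r: float, length: float, nu: float) -> float:
    """Matérn correlation for half-integer smoothness, using closed forms.

    Only nu in {0.5, 1.5, 2.5} is supported, so no Bessel function is needed.
    """
    s = abs(r) / length
    if nu == 0.5:
        return math.exp(-s)  # exponential model
    if nu == 1.5:
        a = math.sqrt(3.0) * s
        return (1.0 + a) * math.exp(-a)
    if nu == 2.5:
        a = math.sqrt(5.0) * s
        return (1.0 + a + a * a / 3.0) * math.exp(-a)
    raise ValueError("this sketch only supports nu in {0.5, 1.5, 2.5}")


def nested_variogram(h, nugget, components):
    """gamma(h) = nugget * 1[h > 0] + sum_k sill_k * (1 - rho_k(h)).

    components: iterable of (sill, length, nu) tuples, one per Matérn structure.
    """
    if h == 0:
        return 0.0
    gamma = nugget
    for sill, length, nu in components:
        gamma += sill * (1.0 - matern_corr(h, length, nu))
    return gamma


def apparent_nugget(spacing, nugget, components):
    """Hypothetical estimator: extrapolate gamma linearly from lags
    spacing and 2 * spacing back to h = 0."""
    g1 = nested_variogram(spacing, nugget, components)
    g2 = nested_variogram(2.0 * spacing, nugget, components)
    return 2.0 * g1 - g2


def variance_fraction_above_nyquist(length, spacing):
    """1-D exponential (nu = 1/2) field: share of variance at |omega| > pi / spacing.

    With C(r) = sigma^2 exp(-|r| / length), the spectral density is
    S(omega) = sigma^2 * length / (pi * (1 + length^2 * omega^2)), whose integral
    over |omega| <= a equals sigma^2 * (2 / pi) * arctan(length * a).
    """
    nyquist = math.pi / spacing
    return 1.0 - (2.0 / math.pi) * math.atan(length * nyquist)


# Illustrative (made-up) model: true nugget 0.1, a micro-scale structure with
# sill 0.3 and length 0.2, and a large-scale structure with sill 1.0 and
# length 50. All structures are exponential (nu = 0.5).
NUGGET = 0.1
MICRO = (0.3, 0.2, 0.5)
MACRO = (1.0, 50.0, 0.5)

for spacing in (0.05, 5.0):
    c0 = apparent_nugget(spacing, NUGGET, [MICRO, MACRO])
    f_micro = variance_fraction_above_nyquist(MICRO[1], spacing)
    print(f"spacing={spacing:5.2f}  apparent nugget={c0:.3f}  "
          f"micro variance above Nyquist={f_micro:.2f}")
```

With dense sampling (spacing 0.05), the extrapolated nugget stays near the true value of 0.1 (about 0.11). With coarse sampling (spacing 5), it rises to about 0.41, close to 0.1 + 0.3, because roughly 92% of the micro-scale variance lies above the Nyquist frequency. In a sampled field, that power is aliased back into the resolved band. For a structure whose range is far below Δ, the aliased power looks roughly white, which is why it behaves like nugget.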